DevOps, and particularly continuous integration (CI) and continuous delivery (CD), are terms that have been widely adopted in the software development industry over the past few years. Their use is now spreading beyond development into other departments that want to shorten long delivery cycles and iterate on products faster. For those unfamiliar with the terms or the idea behind them: CI/CD is essentially the automation of delivering your software development projects into production, and the broader goal is to bring Development and Operations closer together. In this blog we will mainly focus on CD as it pertains to HANA XS development.

Inside SAP IT, like a lot of other IT departments, we are trying to deploy our HANA XS projects more frequently and more simply, and to move to a more agile approach in delivering solutions for our internal customers.

Firstly, some background: as many XS developers know, the HANA repository is not the easiest “file system” to work with. Because data is stored in the database in a proprietary format and each file needs to be activated, basic operations like moving or copying are hard to automate. To work around this, we decided to do all of our code deployments using HANA’s preferred native “packaged” file type, the delivery unit (DU). A DU contains the entire active code base of a project (or a subset of a project), including changed as well as unchanged files.

In the past we deployed code manually to each of our instances, which required manual intervention and developer/operations availability; we hoped to streamline this. The DU import feature we decided to use was introduced in SPS09 through a command line tool called HDBALM, which allows any PC with the HANA client installed to import packages and delivery units to a HANA server. While there are options to commit and activate individual files from a traditional file system folder (using the FILE API), we felt a DU was better suited to our use case (for example, hdb* objects may need to be created in a specific order).
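The deployment script later in this post hands the password to HDBALM through the `HDBALM_PASSWD` environment variable and exports it for the whole job. A minimal sketch of an alternative, assuming you only need the password for a single `hdbalm` call: a `VAR=value` prefix puts the variable into that one process's environment only. The function name `run_import` is hypothetical; the hdbalm options are passed through unchanged from the caller.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run one hdbalm import with the password visible only to
# that process, instead of exporting HDBALM_PASSWD for the whole job.
# Usage: run_import <hdbalm-binary> <password> <du-file> [hdbalm options...]
run_import() {
  local hdbalm_bin="$1" password="$2" du_file="$3"
  shift 3
  # The VAR=value prefix sets HDBALM_PASSWD for this single command only.
  HDBALM_PASSWD="$password" "$hdbalm_bin" "$@" import "$du_file"
}
```

For example: `run_import /home/i847772/sap/hdbclient/hdbalm "$bamboo_DestXSPassword" "$bamboo_DeliveryUnitFilename" -s -y -h "$bamboo_DestHostname" -p "$bamboo_DestHostPort" -u "$bamboo_DestXSUsername" -c "$bamboo_DestSSLCert"`. The options come before the `import` command, in the same order the script uses.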

Once we could deploy our code to our various servers with HDBALM, we needed something to run the deployments. We chose our internal instance of Atlassian Bamboo: we already use this server for our HCP HTML5 and Java apps, which made it a logical choice for keeping our projects grouped together. Our build agents run Red Hat and have the HANA client installed. We also copy the SSL certificate to the agents, since our HANA servers use HTTPS and hdbalm needs the certificate to connect.
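Because every deployment depends on the agent having both the HANA client and the SSL certificate, a quick pre-flight check can turn a confusing hdbalm error into a clear message. This is a minimal sketch; the function name `preflight_check` and its messages are hypothetical, and it only checks that the files exist, not that the certificate is valid.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for a build agent: fail fast if the HANA
# client's hdbalm binary or the server's SSL certificate is missing.
# Usage: preflight_check <path-to-hdbalm> <path-to-ssl-cert>
preflight_check() {
  local hdbalm_bin="$1" cert="$2"
  if [[ ! -x "$hdbalm_bin" ]]; then
    echo "hdbalm not found or not executable: $hdbalm_bin" >&2
    return 1
  fi
  if [[ ! -r "$cert" ]]; then
    echo "SSL certificate not readable: $cert" >&2
    return 1
  fi
  echo "Agent ready"
}
```

It could run as the first step of the job, e.g. `preflight_check /home/i847772/sap/hdbclient/hdbalm "$bamboo_DestSSLCert" || exit 1`.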

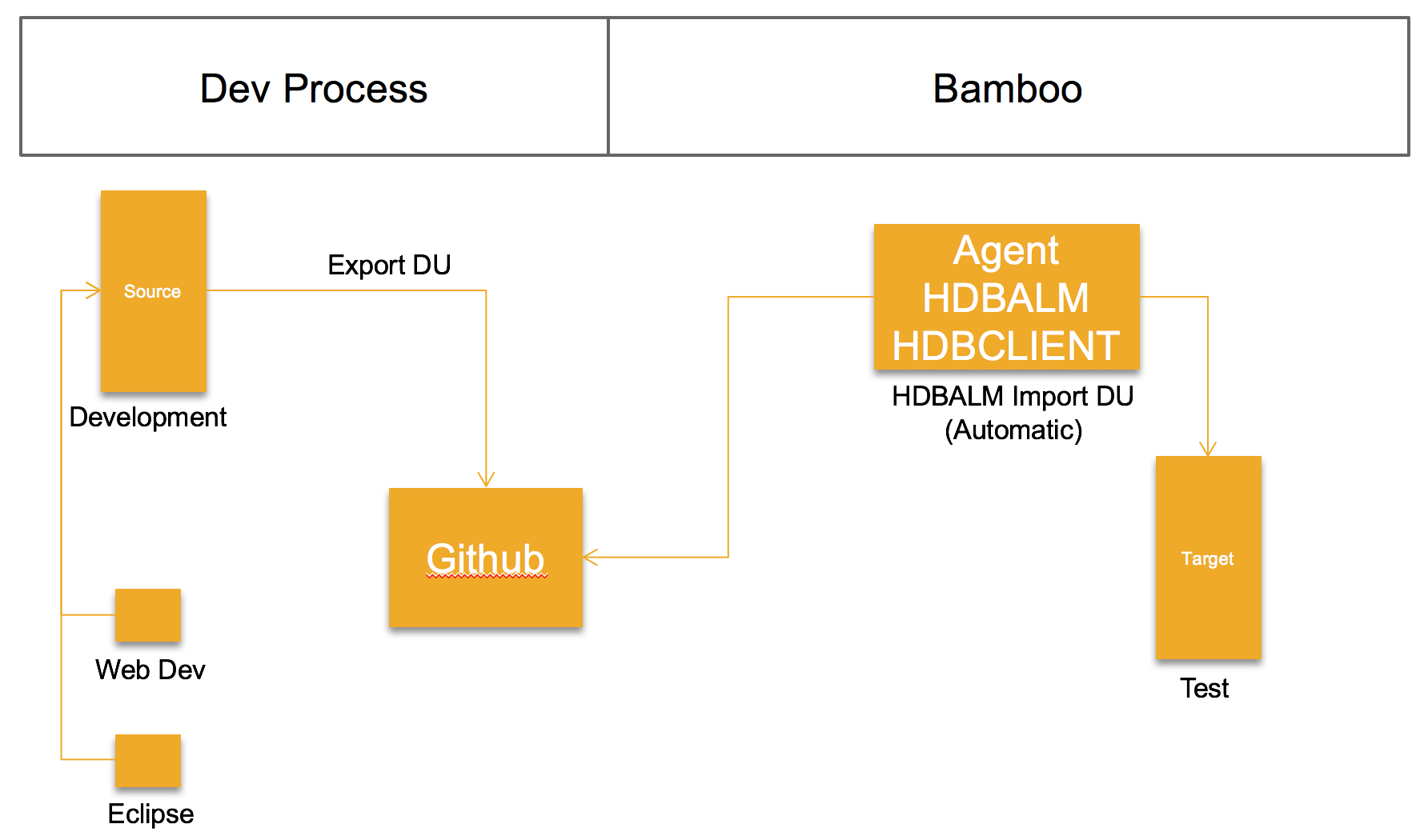

In this example, our landscape is relatively simple, with dedicated Dev, Test and Production HANA HCP instances.

We store our “ready for deployment” delivery unit in our internal GitHub instance so that the file is version controlled and also visible and accessible to our dev team. The premise is that the dev team pushes a version of the delivery unit after each sprint, and it is then ready for deployment to Test. This could just as easily be a file system; however, we like to use the push/tag/release of our GitHub repo to trigger the build deployment.
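When the push triggers the build, the job has to pick the delivery unit file out of the checked-out repo. A minimal sketch, assuming (hypothetically) that the repo holds exactly one DU export with a `.tgz` extension at its top level; the function name `find_du_file` is illustrative, and the result could feed `$bamboo_DeliveryUnitFilename`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: locate the delivery unit export in a checked-out repo.
# Assumes exactly one .tgz DU archive at the top level of the checkout.
# Usage: find_du_file <checkout-dir>
find_du_file() {
  local dir="$1" f
  local -a files=()
  for f in "$dir"/*.tgz; do
    # Without nullglob an unmatched pattern stays literal, so skip missing paths.
    if [[ -e "$f" ]]; then
      files+=("$f")
    fi
  done
  if (( ${#files[@]} != 1 )); then
    echo "Expected exactly one .tgz delivery unit in $dir, found ${#files[@]}" >&2
    return 1
  fi
  echo "${files[0]}"
}
```

Failing when zero or several archives are present keeps the job from silently importing the wrong file.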

FYI: Bamboo is a great tool with a nearly zero cost barrier to entry ($10). If you are considering a build server with a quick and easy installation (*nix) and setup, I would highly recommend it.

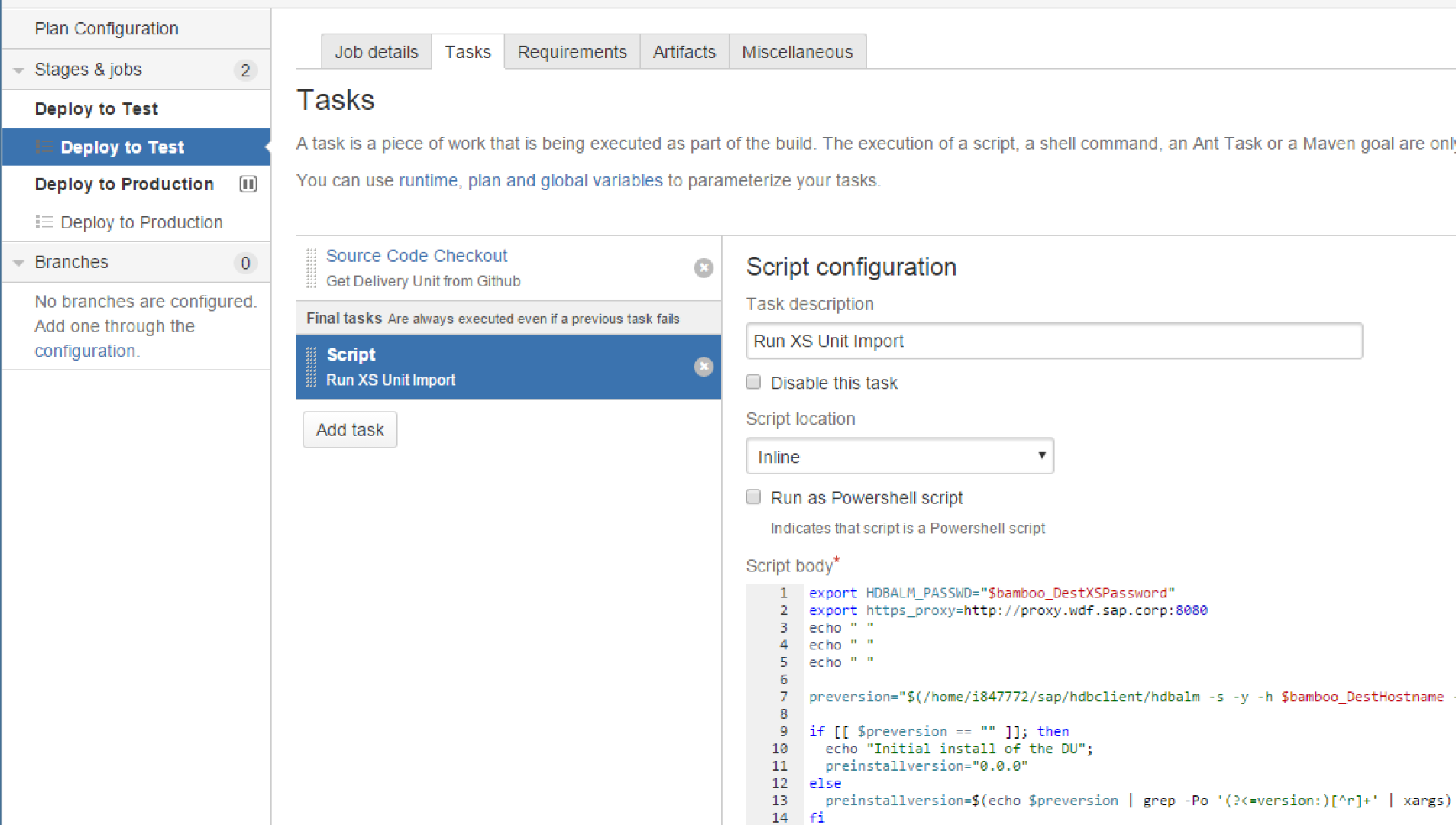

Now that we have covered the logical flow, here are the technical details:

As mentioned previously, our build process is triggered by a new delivery unit being committed to our GitHub repo. Our Bamboo build agent picks up the build, saves it on the build server and deploys it to our Test instance. An email with the results is sent to our dev and test teams. Before the import, we check the version of the delivery unit already on the server; after the import we check it again, compare the two versions and, together with the result of the import command, decide whether the import was successful.
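The check described above boils down to a small decision table. A minimal sketch as a pure shell function, assuming the three inputs have already been gathered: the version before the import, the version after it (`-1` if it could not be queried), and whether the import log reports success. The name `classify_import` and the `yes`/`no` flag are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the post-import decision as a pure function.
# Arguments: pre-install version, post-install version ("-1" if the query
# failed), and "yes"/"no" for whether the import log reports success.
# Prints one of: failed, unchanged, success.
classify_import() {
  local pre="$1" post="$2" log_ok="$3"
  if [[ "$post" == "-1" || "$log_ok" != "yes" ]]; then
    echo "failed"      # version query failed or import not confirmed by the log
  elif [[ "$post" == "$pre" ]]; then
    echo "unchanged"   # imported, but the DU version number was not incremented
  else
    echo "success"
  fi
}
```

Keeping the decision separate from the hdbalm calls makes it easy to test without a HANA server.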

Keep in mind that the commands below use Bamboo variables ($bamboo_*), but they work just as well if you replace them with actual values.
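When the script runs outside Bamboo, a forgotten variable turns into an empty hdbalm argument and a confusing error. A minimal guard, assuming you set the same variable names yourself; `require_vars` and `REQUIRED_VARS` are hypothetical names, while the variable names themselves are the ones the deployment script reads.

```shell
#!/usr/bin/env bash
# Hypothetical guard: abort early if any variable the deployment script reads
# is unset or empty (for example when running it outside Bamboo).
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named $name.
    if [[ -z "${!name:-}" ]]; then
      echo "Missing required variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Variable names used by the deployment script.
REQUIRED_VARS=(bamboo_DestXSPassword bamboo_DestHostname bamboo_DestHostPort
  bamboo_DestXSUsername bamboo_DestSSLCert bamboo_DeliveryUnitName
  bamboo_DeliveryUnitVendor bamboo_DeliveryUnitFilename)
```

Calling `require_vars "${REQUIRED_VARS[@]}" || exit 1` at the top of the script lists every missing variable at once.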

The deployment script is also available in a GitHub gist, where it will be maintained.

```shell
#!/bin/bash
# Import a delivery unit (DU) with HDBALM and verify it by comparing the DU
# version installed on the server before and after the import.
# The $bamboo_* variables are provided by Bamboo; replace them with literal
# values to run the script elsewhere.

HDBALM=/home/i847772/sap/hdbclient/hdbalm
export HDBALM_PASSWD="$bamboo_DestXSPassword"
export https_proxy=http://proxy.wdf.sap.corp:8080

# Connection options shared by every hdbalm call.
CONN_ARGS=(-h "$bamboo_DestHostname" -p "$bamboo_DestHostPort"
           -u "$bamboo_DestXSUsername" -c "$bamboo_DestSSLCert")

# Print the installed DU's details (empty output if the DU is not installed).
get_du_info() {
  "$HDBALM" -s -y "${CONN_ARGS[@]}" du get "$bamboo_DeliveryUnitName" "$bamboo_DeliveryUnitVendor"
}

# Extract the version number. $1 is left unquoted on purpose so the
# multi-line hdbalm output is collapsed onto one line before grep runs.
extract_version() {
  # shellcheck disable=SC2086
  echo $1 | grep -Po '(?<=version:)[^r]+' | xargs
}

# Print the import log, or a hint if no log file was produced.
print_log() {
  echo "******************** Log File $LOG ********************"
  if [[ -n "$LOG" && -f "$LOG" ]]; then
    cat "$LOG"
  else
    echo "No log file, ensure the job is running on a machine with HDBALM"
  fi
  echo "******************** //Log File ********************"
}

preversion="$(get_du_info)"
if [[ -z "$preversion" ]]; then
  echo "Initial install of the DU"
  preinstallversion="0.0.0"
else
  preinstallversion="$(extract_version "$preversion")"
fi
echo "Pre Install version: $preinstallversion"

IMPORT_LOG="$("$HDBALM" -s -y -j "${CONN_ARGS[@]}" import "$bamboo_DeliveryUnitFilename")"

postversion="$(get_du_info)"
if [[ -z "$postversion" ]]; then
  echo "Unable to query installed delivery unit version"
  postinstallversion="-1"
else
  postinstallversion="$(extract_version "$postversion")"
fi
echo "Post Install version: $postinstallversion"

export HDBALM_PASSWD=""

# The last word of the import output is taken to be the import log file path.
LOG="${IMPORT_LOG##* }"

# Only treat the import as successful if the log confirms it.
import_ok=false
if [[ -n "$LOG" && -f "$LOG" ]] && grep -q "Successfully imported delivery units" "$LOG"; then
  import_ok=true
fi

if [[ "$postinstallversion" == "-1" ]] || ! $import_ok; then
  echo "******************** Import of the DU has failed ********************"
  print_log
  exit 1
elif [[ "$postinstallversion" == "$preinstallversion" ]]; then
  echo "******************** Import of the DU completed, but the version has not changed ********************"
  echo "It's possible you have not incremented the version numbers"
  print_log
  exit 0
else
  echo "******************** Import of the DU has completed successfully ********************"
  echo "Installation successful"
  exit 0
fi
```
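The script relies on two string manipulations: a `grep -P` lookbehind that pulls the version out of the `du get` output, and the `${var##* }` expansion that keeps only the last space-separated word of the import output as the log path. A minimal sketch that isolates them so they can be tried on their own; the function names and the sample text are hypothetical, since the exact hdbalm output format is an assumption here. Note that `[^r]+` stops at the next letter "r", so it relies on the field after the version starting with "r".

```shell
#!/usr/bin/env bash
# Hypothetical helpers isolating the script's two string manipulations.

# Version extraction with the same grep -P lookbehind as the script.
# $1 is unquoted on purpose: multi-line output is collapsed onto one line.
extract_version() {
  # shellcheck disable=SC2086
  echo $1 | grep -Po '(?<=version:)[^r]+' | xargs
}

# "${1##* }" strips everything up to and including the last space,
# leaving the final word (assumed to be the log file path).
last_word() {
  echo "${1##* }"
}
```

Both need GNU tools in practice: `grep -P` is a GNU grep option, which the Red Hat build agents provide.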

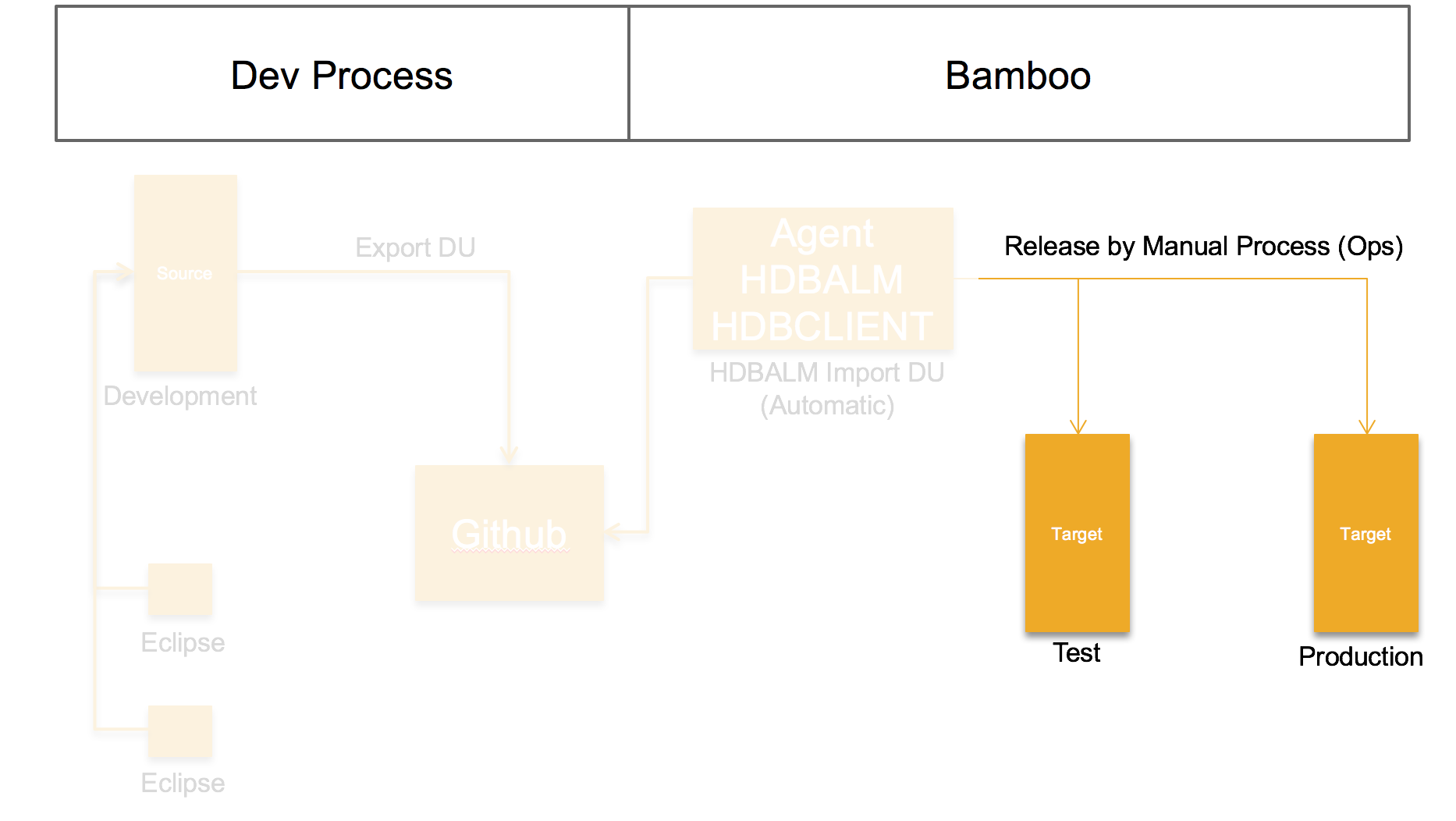

Once our release has been tested in our test environment and is ready for production deployment, we use Bamboo's deploy function to deploy the delivery unit to our production instance. This process is identical to the one for Test, except that the delivery unit is not pulled from GitHub but from the build job artifacts (since the code we want to release might not be the delivery unit currently in our dev repo). In this case we always specify the version of the Dev to Test build job.
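One optional safeguard, not part of the setup described above: record a checksum of the delivery unit when it goes to Test and verify it before the production deployment, so Production is guaranteed to receive the identical file. A minimal sketch using GNU coreutils `sha256sum`; the function names and checksum file are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical safeguard: record the DU checksum at Test time and verify it
# before deploying to Production. sha256sum stores the path as given, so use
# absolute paths (or run both steps from the same working directory).

# Usage: record_checksum <du-file> <checksum-file>
record_checksum() {
  sha256sum "$1" > "$2"
}

# Usage: verify_checksum <checksum-file>; non-zero exit if the DU file changed.
verify_checksum() {
  sha256sum --check --quiet "$1"
}
```

The checksum file could be kept as a build artifact next to the delivery unit so the deployment step can verify it.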

Our deployment to production is (currently) done not by developers but by our operations team, although it is still performed in Bamboo.

Bamboo screenshot (just for reference)

DevOps on HANA XSA Projects

Since XSA no longer uses the HANA repository, our build jobs and processes will be greatly simplified and very similar to those of traditional projects on the new platform. (Awesome!)

If you have comments, questions or suggestions, we are always interested in hearing them and in sharing more on these topics.